- Victor Adedayo, a Ph.D. student in Applied Linguistics at Oklahoma State University, critiques the UTME’s Use of English, calling it ineffective and unfair

- He explains how the exam lacks reliability, construct validity, and authenticity, thus failing to prepare students for real-world academic language demands

- Victor recommends reforms, including redesigning the test for equity, improving reliability, and tailoring content to reflect diverse academic backgrounds

Victor Adedayo is a Ph.D. student in the United States. Before leaving Nigeria, he was an English language tutor at a Nigerian secondary school, and he worked as a language content specialist and a user experience researcher.

In this interview with Legit.ng, the doctoral student talks about education in Nigeria and explains why the UTME’s Use of English is both ineffective and unfair to many test-takers.

Can you tell us about yourself?

My name is Victor Adedayo. I am a language enthusiast, teacher, and researcher from Kwara State, Nigeria. I am a second-year PhD student in Applied Linguistics at Oklahoma State University in the US, and also the assistant director of the University Writing Center.

In terms of academic pursuit, I am drawn to corpus linguistics, natural language processing, and discourse analysis. When I’m not doing linguistic stuff, you’ll find me watching Nigerian movies, soccer, or reading to my son. After my PhD, I’d love to continue teaching, researching, and leveraging my work to advocate for equity.

You are currently a Ph.D. student in Applied Linguistics, and your research highlights important educational issues in different contexts. Can you tell us more about your research?

My research focuses on how language is used to reinforce or challenge racial inequities. Specifically, I employ a corpus-based critical discourse approach to investigate how language legitimizes or delegitimizes different social groups. This work allows me to explore the intersections of language, power, and social justice in various contexts, particularly in higher education.

Can you explain what a corpus-based critical discourse approach is?

The term “corpus” refers to a body of text, and corpus linguistics is a branch of linguistics that involves the analysis of linguistic data stored in a corpus. Critical discourse analysis (CDA), on the other hand, is a social approach that examines social inequalities that may arise from language use. My research combines these two approaches to investigate how language is used to perpetuate or challenge social inequity, especially in higher education. In fact, I will be presenting one of my studies, “Metaphor in university responses to anti-black violence,” at the AAAL conference, the biggest conference for applied linguists in the US.

Speaking of social inequity, what are your thoughts on its presence in the Nigerian educational system?

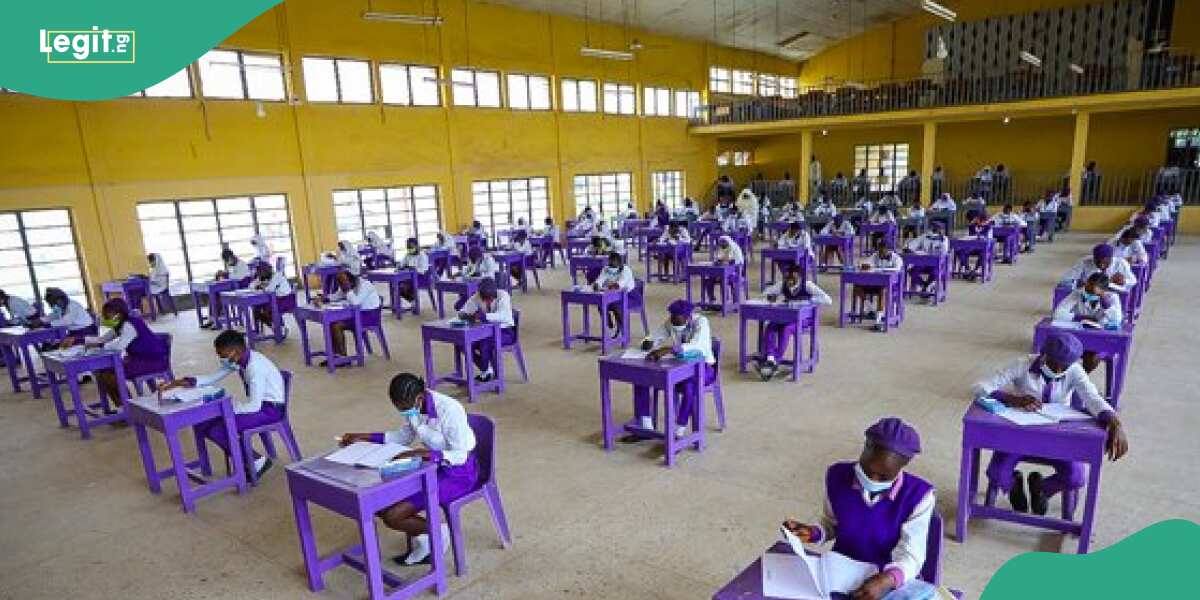

From my perspective as an applied linguist, the Nigerian educational system still reflects colonial structures and perpetuates social inequities. For instance, our classrooms often adopt deficit ideologies that demonize Nigeria’s linguistic diversity while prioritizing British linguistic standards. These practices are reinforced through high-stakes national exams like the UTME. In my opinion, UTME’s Use of English is both ineffective and unfair to many test-takers.

Why do you consider the UTME’s Use of English ineffective and unfair?

Let’s do a brief analysis of the exam based on the six qualities of a useful test proposed by Bachman and Palmer (1996): reliability, construct validity, authenticity, interactiveness, impact, and practicality. These criteria help determine whether a test is both effective and fair. For an exam like the UTME, which influences the educational trajectories of millions of students annually, it is important to assess its usefulness and confirm how equitable and meaningful it is.

How does the UTME’s Use of English fare in terms of reliability?

A reliable test produces consistent scores regardless of the testing situation or conditions. Therefore, if the UTME’s Use of English is administered to the same group of students in two different settings, or with two or three different question types, their scores should not differ meaningfully. Although the UTME has a consistent scoring procedure, in the sense that the questions are multiple-choice and the preprogrammed answers stay the same, the reliability of the exam is still questionable. The testing body does not provide any convincing metric to show that test results are consistent irrespective of the testing situation across the 793 computer-based test centers in the country.
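To illustrate the kind of metric this answer refers to, here is a minimal sketch of a test-retest style check: it computes the Pearson correlation between the scores the same candidates obtain in two sittings. The function name `pearson_r` and all of the scores are hypothetical and invented for illustration; they are not JAMB data, and nothing here describes JAMB's actual procedures.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equally long lists of scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two score lists of equal length (at least 2)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products and sums of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("scores must vary for a correlation to be defined")
    return cov / sqrt(var_x * var_y)


# Hypothetical scores for the same six candidates in two sittings
# (for example, at two different test centers). Invented numbers.
sitting_one = [52, 61, 47, 70, 58, 66]
sitting_two = [50, 63, 45, 72, 55, 68]

r = pearson_r(sitting_one, sitting_two)
print(f"Test-retest correlation: {r:.2f}")
```

A coefficient close to 1 would suggest that candidates are ranked similarly across sittings, while a low value would point to inconsistency. A published figure of this kind, computed on real data, is one example of the evidence the answer says is missing.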

How does the UTME’s Use of English fare in terms of construct validity?

Construct validity examines whether a test measures what it claims to measure. The UTME’s Use of English is a discrete-point test that focuses on isolated language skills, such as comprehension and summary, lexis and structure, and oral proficiency. The problem is that candidates will never use language in such an isolated way in their target language use (TLU) domain, which is the university. While the exam may be measuring the constructs it sets out to measure in its different sections using MCQs and cloze tests, the construct of the test is different from the reality of language use in the university, making it meaningless to the test-takers.

Comprehension, grammar, etc., are not the only aspects of academic English the test-takers will be exposed to in the university, and the disparity in genre expectations may make it difficult for students to socialize into the academic discourse community. Moreover, the UTME’s attempt to assess oral proficiency is fundamentally inappropriate. The identification of “correct pronunciation,” as described in the UTME’s objectives, can only be done through a listening activity, which is missing from the test. Therefore, the exam does not measure what it claims to measure, and it is impossible to determine whether success in the UTME’s Use of English translates to successful use of English for academic purposes.

Finally, JAMB’s failure to provide guidelines for interpreting its test scores leaves the exam open to inconsistencies, biases, and ideological distortions, such as differing cut-off scores that favor some disciplines over others. This practice mars the validity of the UTME as a whole.

Authenticity is another area you highlighted earlier on. How does the UTME align with real-world language use in tertiary education?

Unfortunately, the UTME’s Use of English falls short on authenticity. The academic language expectations in the university involve letter writing, classroom discussion, argumentation, etc. While JAMB tries to incorporate academic writing in its summary and comprehension section, one would expect the exam body to be conscious of the different disciplines of the test-takers. Since the test-takers’ class grouping (science, arts, commercial) broadly reflects their educational interests and background, using a one-size-fits-all passage to test comprehension can unfairly benefit those whose background or class grouping is better represented in the exam in the year they take it.

Furthermore, the measure of oral proficiency does not correspond with the speaking expectations of test-takers in the TLU domain. In many Nigerian universities, the Educated Nigerian English variety is often used on campus and within formal academic settings. Assessing oral proficiency based on idealized native-speaker content reinforces native-speaker hegemony. It does not reflect the reality of the TLU domain and does not promote localized pedagogies that value local linguistic practices; rather, it accentuates a deficit perspective that forces assimilation. Such a radical departure from the TLU domain makes the UTME’s Use of English inauthentic.

How do you think the UTME balances its large-scale administration with the other qualities of usefulness?

The use of multiple-choice questions is a practical decision based on the population of test-takers, and the transition from paper-based to computer-based testing is a good development. So I will give it to JAMB in terms of practicality. However, while prioritizing practicality over reliability, validity, and authenticity may have ensured efficient testing, it may limit the ability to fully assess candidates’ language proficiency and may be the primary reason admitting institutions duplicate the test.

Given these insights, what recommendations would you make to improve the UTME’s Use of English section?

First, JAMB should address technical and administrative issues to enhance reliability. Second, the test should be redesigned to better reflect the language demands of tertiary education. Third, authenticity can be improved by tailoring test content to reflect the diverse academic backgrounds of candidates. Lastly, there should be clearer guidelines for interpreting scores to reduce ideological biases and inconsistencies.

Source: Legit.ng